I still remember the frantic phone call late one Tuesday evening. It was Sarah, our Head of Sales, her voice tight with panic. "The system’s down, Mark! We can’t process orders. Everything’s frozen!" My stomach dropped. "The system" was our shiny new cloud-based ERP, the very digital heart of our entire operation. Every sale, every inventory movement, every financial transaction flowed through it. And now, it was flatlining.

That night was a blur of hurried calls, frenzied troubleshooting, and the sinking feeling of helplessness. We were essentially blind. We knew something was wrong, but what exactly? Was it the network? The database? An integration with our shipping provider? We were reacting, scrambling, and losing precious business by the minute. It took hours to diagnose a subtle integration failure that had cascaded into a full system lockout. By then, the damage was done, and the trust in our "always-on" cloud ERP had taken a serious hit.

That experience, painful as it was, was a wake-up call. It was the moment I realized that simply having an ERP in the cloud wasn’t enough. We needed eyes and ears on it, a continuous, vigilant watch. We needed ERP cloud application monitoring.

For those of you just dipping your toes into this world, let me simplify things. An ERP, or Enterprise Resource Planning system, is like the central nervous system for a company. It connects all the different departments – sales, finance, inventory, HR – so they can share information and work together seamlessly. When we talk about "cloud ERP," we mean that this powerful system isn’t sitting on servers in your office basement anymore. Instead, it’s hosted and managed by a third-party provider over the internet. It’s like moving from owning your own house to renting a fully serviced apartment. You still get all the benefits, but someone else handles the plumbing and electricity.

The promise of cloud ERP is incredible: scalability, reduced IT overhead, automatic updates, and access from anywhere. But here’s the kicker, and the lesson that Tuesday night drove home: just because it’s in the cloud doesn’t mean it’s magic. It doesn’t mean problems vanish. In fact, the complexity can sometimes increase because you’re dealing with multiple layers of technology and, often, multiple vendors. And that’s precisely why ERP cloud application monitoring isn’t just a nice-to-have; it’s absolutely essential. It’s your radar, your dashboard, and your early warning system all rolled into one.

After that disastrous Tuesday, my team and I embarked on a mission. We had to understand what was really happening inside our cloud ERP. We needed to shift from being reactive firefighters to proactive guardians. Our goal was simple: know about a problem before Sarah called, before customers were impacted, before the business suffered.

Our first step was understanding what we needed to watch. It wasn’t just about whether the system was "on" or "off." It was far more nuanced. We identified several key areas, what I like to call the "pillars" of effective ERP cloud application monitoring:

The Performance Pulse: Is Our System Fast Enough?

Imagine walking into a grocery store where every checkout line moves at a snail’s pace. That’s what a slow ERP feels like to your employees. Performance monitoring is all about tracking the speed and efficiency of your application. We started looking at things like transaction response times – how long did it take for a sales order to save? How quickly did an inventory report generate? What about user login times?

We realized that even if the system was technically "up," if it was sluggish, it was still impacting productivity and morale. Our monitoring tools began to reveal bottlenecks. We discovered a particular custom report that, when run, would hog resources and slow down the entire finance module. We found specific database queries that were taking too long. With this data, we could work with our cloud provider or internal teams to optimize, fine-tune, or even redesign parts of our ERP processes. It felt like giving our digital heart a stress test and then prescribing the right exercise regimen. We used application performance monitoring (APM) solutions to dive deep, looking at CPU usage, memory consumption, and even individual code execution paths. It was like becoming a digital detective, piecing together clues to understand why things were lagging.
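To make "sluggish" something you can alert on, it helps to track a high percentile of response times per transaction type rather than the average, because an average can hide the handful of painfully slow saves that users actually remember. Here’s a minimal Python sketch of that idea, assuming the timings (in milliseconds) have already been collected by an APM tool or a log export; the transaction names, sample numbers, and the 2,000 ms target are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def slow_transactions(
    timings_ms: Dict[str, List[float]], pct: float = 95, target_ms: float = 2000
) -> Dict[str, float]:
    """Return {transaction: percentile value} for transaction types that miss the target."""
    report = {}
    for name, samples in timings_ms.items():
        p = percentile(samples, pct)
        if p > target_ms:
            report[name] = p
    return report


# Hypothetical sample data: response times in milliseconds per transaction type.
timings = {
    "save_sales_order": [420, 510, 480, 3900, 450, 470, 500, 460, 430, 490],
    "inventory_report": [2500, 2700, 2600, 2900, 3100],
    "user_login": [300, 280, 310, 295],
}

print(slow_transactions(timings))  # → {'save_sales_order': 3900, 'inventory_report': 3100}
```

In this made-up data, `save_sales_order` averages 811 ms, comfortably under target, yet its 95th percentile exposes the 3.9-second outlier. Nearest-rank is used here only for simplicity; many APM products calculate percentiles for you, so in practice the threshold is the part you’d tune.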

The Availability Alert: Is Anyone Home?

This pillar is probably the most straightforward: is the system accessible? Can users log in and do their work? But even this isn’t as simple as it sounds. "Available" for one user might not mean "available" for another, especially if they’re in different geographical locations or on different network segments.

Our monitoring strategy here focused on constant uptime checks, not just of the main login page, but of critical business processes. We set up what are called "synthetic transactions." These are automated scripts that mimic a real user’s journey: logging in, creating an order, saving it, logging out. If any step in that sequence failed, or took too long, we’d get an immediate alert. This was crucial because it allowed us to detect issues even before a human user might notice them.

Remember that frantic call from Sarah? With availability monitoring in place, we aimed to be the ones calling her to say, "Hey, we’re seeing a slight hiccup in the order processing, but we’re already on it," rather than the other way around. It gave us back a sense of control and significantly reduced the "panic factor" during incidents.
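The synthetic transactions described above boil down to a scripted journey where each step is timed and the first failure raises an alert. The Python sketch below shows that shape, assuming each step can be wrapped in a plain function. `log_in`, `create_order`, `save_order`, and `log_out` are hypothetical stand-ins for whatever your ERP and test tooling actually expose (a browser-automation script, say, or the vendor’s API), and the 2-second per-step limit is an arbitrary example.

```python
import time
from dataclasses import dataclass
from typing import Callable, List, Optional, Sequence, Tuple


@dataclass
class StepResult:
    name: str
    ok: bool
    seconds: float
    error: Optional[str] = None


def run_synthetic_transaction(
    steps: Sequence[Tuple[str, Callable[[], None]]], max_step_seconds: float
) -> List[StepResult]:
    """Run each step in order, timing it; stop at the first step that fails or is too slow."""
    results: List[StepResult] = []
    for name, action in steps:
        start = time.perf_counter()
        error: Optional[str] = None
        try:
            action()
        except Exception as exc:  # any exception inside a step counts as a failed check
            error = f"{type(exc).__name__}: {exc}"
        elapsed = time.perf_counter() - start
        # Slowness is judged after the step returns; this sketch cannot interrupt a hung step.
        if error is None and elapsed > max_step_seconds:
            error = f"too slow ({elapsed:.2f}s > {max_step_seconds:.2f}s limit)"
        results.append(StepResult(name, error is None, elapsed, error))
        if error is not None:
            break  # later steps (save, log out) depend on this one succeeding
    return results


def alert_message(results: List[StepResult]) -> Optional[str]:
    """Return an alert for the first failed step, or None if the whole journey passed."""
    for r in results:
        if not r.ok:
            return f"ALERT: synthetic order check failed at '{r.name}': {r.error}"
    return None


# Hypothetical stand-ins for real ERP calls; create_order simulates an outage.
def log_in() -> None:
    time.sleep(0.01)


def create_order() -> None:
    raise ConnectionError("order service returned HTTP 503")


def save_order() -> None:
    pass


def log_out() -> None:
    pass


journey = [("log in", log_in), ("create order", create_order),
           ("save order", save_order), ("log out", log_out)]
results = run_synthetic_transaction(journey, max_step_seconds=2.0)
print(alert_message(results))
# → ALERT: synthetic order check failed at 'create order': ConnectionError: order service returned HTTP 503
```

A scheduler or monitoring platform would typically run a check like this every few minutes, ideally from more than one location, and route the alert to whoever is on call. Because slowness is only measured after a step returns, a production check would also need a hard timeout to catch a step that hangs outright.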

The User Experience Lens: What Do Our People Actually See?

This was a fascinating area of discovery for us. We had all these technical metrics – CPU usage, database query times, network latency – and they might all be showing green lights. But then, a user would complain, "The system feels slow today," or "It keeps freezing when I try to do X." There was a disconnect.

We learned that technical metrics don’t always tell the full story of what a real person experiences. User experience (UX) monitoring bridged this gap. It meant looking at things from the perspective of the actual end-user. How long did it take for a page to load in their browser? Were they encountering specific errors when clicking certain buttons? Were they able to complete their workflows without interruption?

We deployed real user monitoring (RUM) tools that collected data directly from our users’ browsers and devices. This gave us invaluable insights. We found that while our main data center was performing perfectly, users in a remote office were experiencing slow load times due to a local network bottleneck, something our server-side monitoring wouldn’t have caught. This insight allowed us to address the specific problem for those users, improving their productivity and satisfaction. It’s about empathy in IT – understanding that your system’s health is ultimately measured by how well it serves the people who use it every day.
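RUM tools typically send a small timing record (often called a "beacon") from each user’s browser, and grouping those records by location is enough to surface the kind of remote-office bottleneck we found. A minimal Python sketch, assuming each beacon has already been reduced to a `(location, page_load_ms)` pair; the location names, numbers, and the threshold of 1.5 times the overall median are made up for illustration.

```python
from collections import defaultdict
from statistics import median
from typing import Dict, Iterable, List, Tuple


def slow_locations(
    beacons: Iterable[Tuple[str, float]], factor: float = 1.5
) -> Dict[str, float]:
    """Return {location: median load time} for locations slower than factor * overall median."""
    records = list(beacons)
    if not records:
        return {}
    by_location: Dict[str, List[float]] = defaultdict(list)
    for location, load_ms in records:
        by_location[location].append(load_ms)
    overall = median(ms for _, ms in records)
    medians = {loc: median(times) for loc, times in by_location.items()}
    return {loc: m for loc, m in medians.items() if m > factor * overall}


# Hypothetical beacons: (location, page load time in ms) as reported by users' browsers.
beacons = [
    ("HQ", 900), ("HQ", 1100), ("HQ", 1000),
    ("Warehouse", 1200), ("Warehouse", 950),
    ("Remote office", 4200), ("Remote office", 3900), ("Remote office", 4500),
]
print(slow_locations(beacons))  # → {'Remote office': 4200}
```

Comparing medians rather than individual page loads keeps one unlucky request from triggering an alert. With only a few beacons per location, you’d want a minimum sample size before trusting the result.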

The Security Shield: Guarding Our Digital Vault

With our ERP in the cloud, security became an even more critical concern. We were entrusting our most sensitive business data to a third party, and while they had their own robust security measures, we still needed our own layer of vigilance. Security monitoring isn’t just about firewalls; it’s about constant watchfulness for unusual activity.

We started collecting and analyzing logs from our ERP and its surrounding infrastructure. We looked for things that were out of the ordinary: multiple failed login attempts from a strange IP address, unusual data access patterns, modifications to critical configurations outside of scheduled maintenance windows. It’s like having a digital security guard patrolling the premises 24/7, looking for anything suspicious.
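Rules like "multiple failed login attempts from a strange IP address" usually come down to counting events per source within a sliding time window. Here’s a minimal Python sketch, assuming failed-login events have already been parsed out of the ERP’s audit logs into `(timestamp_seconds, ip)` pairs in time order; the threshold of five failures in five minutes is an illustrative choice, and the IP addresses come from the reserved documentation ranges.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class FailedLoginDetector:
    """Flags an IP once it has max_failures failed logins within window_seconds."""

    def __init__(self, max_failures: int = 5, window_seconds: float = 300) -> None:
        self.max_failures = max_failures
        self.window_seconds = window_seconds
        self._failures: Dict[str, Deque[float]] = defaultdict(deque)

    def record_failure(self, ip: str, timestamp: float) -> bool:
        """Record one failed login; return True if this IP is now at or over the threshold.

        Assumes events arrive in non-decreasing timestamp order.
        """
        window = self._failures[ip]
        window.append(timestamp)
        # Drop failures that have slid out of the time window (the newest one always stays).
        while timestamp - window[0] > self.window_seconds:
            window.popleft()
        return len(window) >= self.max_failures


detector = FailedLoginDetector(max_failures=5, window_seconds=300)
failed_logins = [  # (seconds since start of log, source IP): illustrative data only
    (0, "203.0.113.7"), (20, "198.51.100.4"), (30, "203.0.113.7"),
    (60, "203.0.113.7"), (90, "203.0.113.7"), (120, "203.0.113.7"),
    (900, "198.51.100.4"),
]
alerts = [ip for ts, ip in failed_logins if detector.record_failure(ip, ts)]
print(alerts)  # → ['203.0.113.7']
```

Once an address crosses the threshold, every further failure inside the window also returns `True`, so a real alerting pipeline would de-duplicate or rate-limit the notifications.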

This also extended to compliance. Many industries have strict regulations about data privacy and security. Monitoring helps us demonstrate that we’re adhering to those standards, providing audit trails and evidence of our security posture. It’s a never-ending game of cat and mouse with potential threats, but robust security monitoring gives us peace of mind that we’re doing everything we can to protect our valuable data.

The Integration Interceptor: When Systems Talk (or Don’t)

Modern ERPs rarely live in isolation. They are typically integrated with dozens, if not hundreds, of other applications: CRM systems, e-commerce platforms, payment gateways, supply chain tools, data warehouses. Remember that initial outage? It was an integration failure. When one link in this chain breaks, the entire process can grind to a halt.

Integration monitoring became a priority. We tracked the health of every connection point, every API call, every data transfer. Did the order from our e-commerce site successfully make it into the ERP? Did the inventory update from the warehouse reach the sales team? We set up alerts for failed API calls, data mismatches, or delays in data synchronization.
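One way to catch data mismatches and sync delays is a periodic reconciliation: compare what the source system says it sent with what actually landed in the ERP. A minimal Python sketch, assuming both sides can export orders with an ID, a total, and a creation time; the `Order` shape, the order IDs, and the 15-minute sync allowance are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Iterable, List, Tuple


@dataclass(frozen=True)
class Order:
    order_id: str
    total_cents: int  # money as integer cents to avoid floating-point comparison issues
    created_at: datetime


def reconcile(
    shop_orders: Iterable[Order],
    erp_orders: Iterable[Order],
    now: datetime,
    max_sync_delay: timedelta = timedelta(minutes=15),
) -> Tuple[List[str], List[str]]:
    """Return (missing_ids, mismatched_ids) for orders the e-commerce site sent."""
    erp_by_id = {o.order_id: o for o in erp_orders}
    missing: List[str] = []
    mismatched: List[str] = []
    for order in shop_orders:
        erp = erp_by_id.get(order.order_id)
        if erp is None:
            # Only flag as missing once the order is older than the allowed sync delay.
            if now - order.created_at > max_sync_delay:
                missing.append(order.order_id)
        elif erp.total_cents != order.total_cents:
            mismatched.append(order.order_id)
    return missing, mismatched


# Hypothetical exports from both systems.
now = datetime(2024, 1, 1, 12, 0)
shop = [
    Order("A-100", 4999, now - timedelta(hours=1)),
    Order("A-101", 1250, now - timedelta(minutes=40)),
    Order("A-102", 800, now - timedelta(minutes=30)),
    Order("A-103", 2000, now - timedelta(minutes=2)),
]
erp = [
    Order("A-100", 4999, now - timedelta(hours=1)),
    Order("A-101", 1205, now - timedelta(minutes=40)),
]
print(reconcile(shop, erp, now))  # → (['A-102'], ['A-101'])
```

Orders younger than the sync allowance (`A-103` here) are deliberately left alone, so normal in-flight latency doesn’t page anyone; only orders that should have arrived by now, or arrived with different totals, are reported.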

This was complex because it often involved multiple vendors and technologies. But by carefully monitoring these digital conversations between systems, we could quickly pinpoint exactly where a problem originated. It saved us countless hours of finger-pointing and allowed us to resolve issues much faster, ensuring that data flowed smoothly across our entire digital ecosystem.

The Tools of Our Trade: Our Monitoring Arsenal

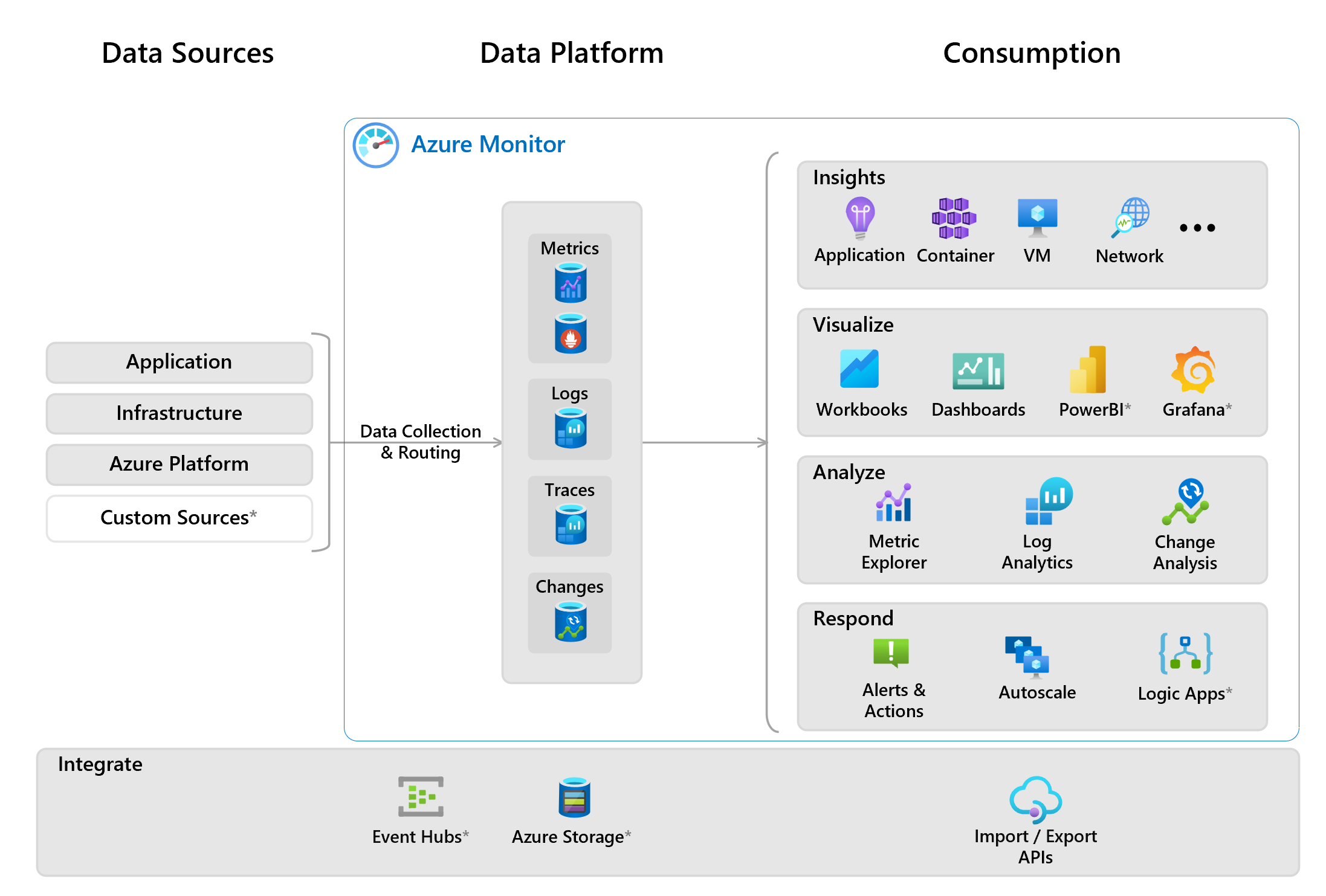

So, how did we achieve all this? We didn’t build it from scratch, of course. We leveraged a combination of tools and strategies:

- Dashboards: These became our command center, our digital cockpit. Customizable dashboards displayed key metrics in real time – green for good, yellow for warning, red for critical. We could see the overall health of our ERP at a glance, drill down into specific modules, or check the performance for different user groups. It turned abstract data into actionable insights.